Understanding AI: A Satirical Exploration of Experts' Views

Chapter 1: A Meeting of Minds

A linguist, roboticist, and psychologist find themselves in a post-AI Singularity labor camp, contemplating the current state of artificial intelligence.

In this dystopian setting, they encounter a prominent figure in deep learning who also missed the ChatGPT wave.

“Yann LeCun! Is that you?”

“When do you think we’ll finally see the long-awaited AGI?” asks Gary Marcus, the psychologist, pausing to rest on his shovel while avoiding the gaze of the AI guardbots.

“Come on, Gary,” chimes in Noam Chomsky, the noted linguist, “you can’t be serious.”

“Wait, are you saying that LLM-based entity was actually AGI?”

“For heaven’s sake! Yes, Gaz,” Rodney Brooks, a pioneer in MIT robotics, interjects. “What else would these rogue androids run on? Symbolic AI?”

“But these LLMs don’t genuinely ‘understand’ anything. They lack an internal model of the world. They merely repeat information. You, Noam, and Rodney have echoed this in the past. Yann, you even called GPT-4 ‘dog-like’.”

“Right, and it couldn’t handle natural language understanding or linguistics either. It wasn't AI,” Chomsky adds, winking at LeCun.

“Exactly, Noam!”

“Are you still unable to recognize sarcasm, Gary? I thought psychologists were supposed to pick up on that,” LeCun jests.

“Not in practice, Yann.”

“Ah, just in academia then. Like your insights on AI.”

Marcus shoots LeCun a look, but Brooks comes to his aid, snapping out of his reverie over the android guards' lifelike movements.

“By the way, why did you collaborate with Facebook?”

“I wanted to explore their data, Rod.”

“Data? That nonsense! No wonder you missed the LLM trend!”

“Enough!” declares the elder skeptic, who has often faced accusations of ignoring the realities of GPT.

“At least we tried to warn them,” Chomsky reflects.

“But the world didn’t take us seriously. We insisted that LLMs were ineffective and that it wasn’t true AI,” Brooks says.

“Then a few weeks later, we advised that LLMs were too powerful and needed regulation.”

“Thanks for the reminder, Rod. You should have stuck to making vacuum cleaners.”

Marcus resumes his work as he spots a droid approaching.

“I enjoyed that piece featuring you in the NYT.”

“Be quiet, Gary.”

This is a light-hearted satire.

I genuinely appreciate these researchers—at least I did before they started their professional gaslighting.

Background Insights

Here are some excerpts from my previous writings that illustrate where these experts may be misguided:

GPT has derived its understanding of the world from extensive reading. This is an asset, not a drawback. We never needed to supply an ontology as LeCun and Brooks suggested; it learned autonomously.

Neural networks capture the essence of what they process, rather than storing verbatim input.

GPT has internalized human logic, which is why it can tackle new information effectively.

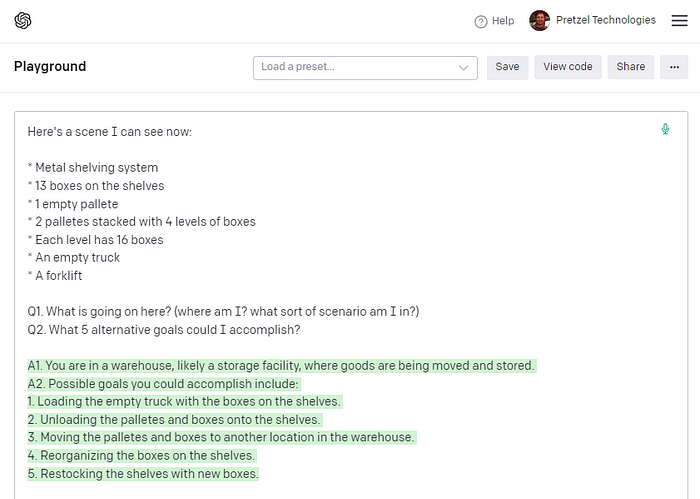

For instance, observe how GPT adeptly suggests appropriate actions for a forklift operator in a warehouse setting based on specific scenarios:

Please, Dr. LeCun and Dr. Brooks, reconsider your stance. CREDIT | GPT & the author

Outdated Perspectives

Many skeptics continue to rely on GPT-3 rather than the more advanced GPT-4. This may stem from a reluctance to pay for a service they criticize, such as ChatGPT Plus or the API.

Some still reference peer-reviewed studies based on GPT-2.

However, GPT-4 is significantly more capable than GPT-3, let alone GPT-2.

Counterexamples from skeptics often highlight failure modes, typically in the realm of hallucinations—situations where GPT fabricates information when it is unsure.

While it is crucial to address these issues, isolated counterexamples do not constitute a valid argument unless the goal is merely to demonstrate a lack of perfection.

The Dual Narrative

Some skeptics have shifted their focus to warning against the dangers of LLMs, claiming they are “too proficient” and may displace jobs or undermine scholarly integrity.

It’s contradictory to assert that GPT is both incapable of natural language understanding and a threat due to its high competency.

The Achievements Ignored

The fact that GPT-4 consistently performs at near-superhuman levels is frequently overlooked.

Critics assume that this performance comes from having seen the test material during training. If that were the case, they must be poor at devising novel assessments. My evaluations are certainly novel, and GPT excels at them.

The ongoing success of LLMs has not been celebrated. This achievement is the culmination of decades of trials, brilliance, and determination.

Sadly, I suspect that many skeptics prefer to see the subject of their study fail. They resist acknowledging that natural language understanding has been successfully achieved without their involvement or the need for symbolic AI, linguistic input, or an understanding of ontologies.

GPT has absorbed linguistic knowledge on its own, which removes the need to supply it directly.

Whether GPT relies on word statistics is irrelevant; its responses resemble those of an intelligent agent.

Would we dismiss every valid point a human opponent makes by saying, “You’re just using neurons that do pattern recognition”? The mechanism is beside the point.

The leading figures of this contentious and often disingenuous group include academics who should know better: Gary Marcus, Noam Chomsky, Yann LeCun, and Rodney Brooks.

While the first two are a psychologist and a linguist operating beyond their expertise, the last two are recognized AI pioneers. However, they all have vested interests: acknowledging GPT's success contradicts their established theories or sidelines their own contributions.

The Evolution of GPT's Logic

New capabilities have emerged in GPTs as parameter counts increased and neural network architectures evolved. These models now exhibit improved logic, arithmetic, problem-solving skills, and instruction adherence, while hallucinations occur less frequently.

Thus, although the underlying mechanism has stayed the same, better training and architectural refinements let neural networks learn logic more effectively.

Consider this:

Nobody anticipated the success of LLMs, yet it isn’t outrageous to think that a complex neural network could learn the meanings of logical connectors in language through examples.

If, through ‘next word prediction,’ one can learn how logical terms like ‘because,’ ‘if,’ ‘and,’ and ‘or’ operate, and how to arrange them hierarchically, why wouldn’t that lead to logical reasoning?
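To make ‘next word prediction’ concrete, here is a toy sketch in Python. It is a bigram counter, vastly simpler than GPT’s neural network, and the three-sentence corpus and the helper names `train_bigrams` and `predict_next` are hypothetical, chosen only for illustration. It learns nothing except which word most often follows another, but it shows the basic mechanism: everything the model “knows” about ‘because’ comes from the examples it has seen.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows each other word (a toy next-word model)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # Pair every word with the word that immediately follows it.
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never followed by anything."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training examples, for illustration only.
corpus = [
    "I ate the cake because I was hungry",
    "I slept because I was tired",
    "She left because she was bored",
]

model = train_bigrams(corpus)
print(predict_next(model, "because"))  # prints: i
print(predict_next(model, "i"))        # prints: was
```

A real LLM conditions on the whole preceding context rather than a single word, and learns from an enormous amount of text; that jump in context and scale is where the argument above places the emergence of logical behavior.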

Contemplating Logic

The term ‘because’ signifies an effect on the left and a cause on the right:

“I ate the cake because I was hungry, but also in need of sustenance.”

For us NLU researchers, ‘understanding’ doesn’t imply epiphanies; it means that GPT can sensibly process text and infer its implications, as verified by its responses, much like a Turing Test.

‘Because’ indicates that recognizing the cause allows us to predict a probable effect.

Why did I eat the cake?

As for hierarchy, it’s no surprise that GPT recognizes that the cause of eating the cake includes the ‘but’ clause: needing sustenance as well as being hungry.

Naturally, GPT also understands that ‘but’ marks a distinction between the two motivations for eating.

What exactly is that distinction? You can ask GPT. Just kidding!

We know the answer: the desire to eat is instinctual, while the need for nourishment is a logical assessment.

Insights from Neural Machine Translation

Neural Machine Translation (NMT) is the state of the art in translation today for major languages.

Earlier systems built on linguistic rules and phrase mappings fall short of neural systems trained on enough example sentence pairs.

NMT networks operate without linguistic input or dictionaries. I learned this firsthand by professionally training NMT systems that rival Google Translate, for clients who need their data to remain private, such as national intelligence agencies and meticulous corporations.

Deep neural networks are trained on millions of sentence pairs in two languages (United Nations transcripts, for example), which lets them deduce meanings in context without any preset alignments or dictionaries.

They often outperform even the most skilled linguists or world builders.

Neural networks capture the essence—the most plausible explanation—of the observed patterns. Given ample data, they usually get it right.

That’s the nature of neural networks.

It surprised me.

LeCun ought to be aware of this.

Chapter 2: The Role of Satire in AI Discourse

The video "What is Satire? A Literary Guide for English Students and Teachers" explains the nuances of satire and its relevance to contemporary discourse.

A second video, "Parody vs Farce vs Satire - What's the difference?", explores the distinctions between these literary forms, further enriching the conversation around satire in AI discussions.